Oxfordshire Mobility Model: MIMAS project update – March 2021

Deploying MIMAS in the cloud

MIMAS is a cloud-based transport modelling solution, developed for Oxfordshire County Council by a consortium of companies, each bringing specialised expertise and each responsible for separate components of the system. Previous articles have described some of those components in detail; this article explains how the overall solution is deployed on Amazon Web Services (AWS), which features and services we use, and why the cloud approach suits the architecture, development, and operation of the solution.

Solution architecture

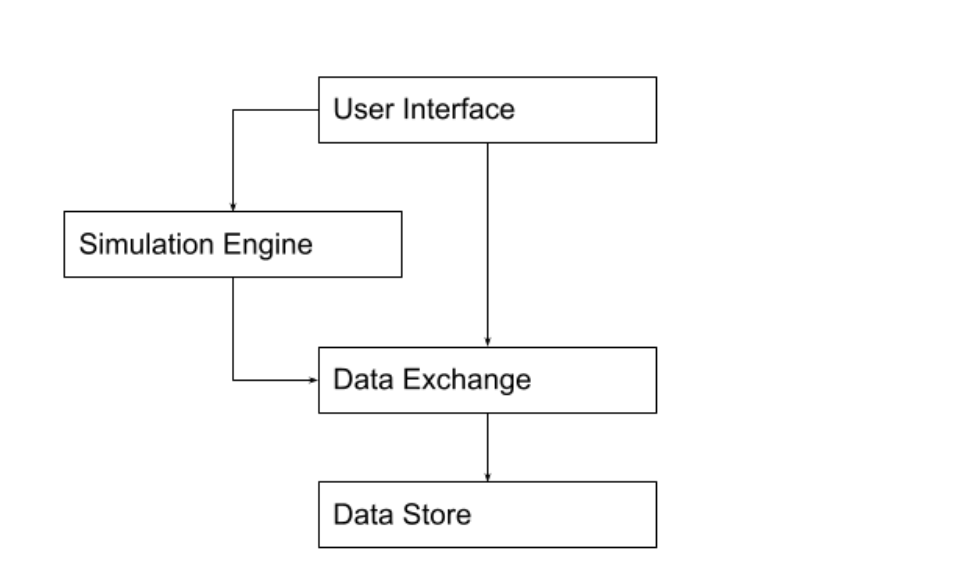

The MIMAS solution consists of a number of components, with a different consortium partner responsible for each one.

The User Interface has been developed by Oxford Computer Consultants and the Simulation Engine by Immense Simulations. Alchera Technologies are responsible for the Data Exchange, and GeoSpock are providing the Data Store.

The components communicate through specialised APIs and other custom-built interfaces. A great deal of collaboration has naturally gone into designing those interfaces, but once they were agreed, the modular approach to the architecture allowed the partners to develop their components relatively independently.

The decentralisation of responsibilities extends beyond the lines of code, however. The philosophy applies as much to the deployment process and the choice of cloud infrastructure as it does to the development of the software. Partners have the freedom to choose the AWS services and deployment model that are right for the requirements of their component. We’ll see below how AWS allows components to be deployed independently without risk of interfering with one another.

The User Interface

We provided a description of the User Interface in a previous update. There are two aspects to the UI: the ‘What-if’ scenario editor, and the visualisation, which allows users to explore and compare the results of running simulations under different scenarios. This, of course, is the component of the system that users interact with directly. It has been developed as a JavaScript web application which runs in the user’s browser.

It is a natural choice to use Amazon CloudFront here. Amongst other things, CloudFront can serve the files in an S3 bucket as a website, so the bucket takes the place of a web server. This is ideal for a JavaScript web client application, where the web page, running in the browser, is functional without additional code running on the server. Deploying this way is very simple, requiring a small amount of configuration and an upload of the website files to the S3 bucket.
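To make the "upload of the website files" step concrete, here is a minimal sketch of how a deployment script might decide what metadata each file should carry in the bucket. It is an illustration only, not the MIMAS deployment code: the function name `plan_upload`, the example file names, and the caching rule (HTML revalidated, everything else cached for a year) are all assumptions.

```python
import mimetypes
from pathlib import PurePosixPath


def plan_upload(relative_paths, long_cache_seconds=31536000):
    """Map site files to S3 object keys with a content type and cache header.

    Hypothetical helper: the caching rule below is an assumption, chosen
    because built JavaScript bundles usually have hashed file names.
    """
    plan = []
    for rel in relative_paths:
        key = PurePosixPath(rel).as_posix()
        content_type, _ = mimetypes.guess_type(key)
        # HTML entry points are revalidated so a new release shows up
        # promptly; other assets are treated as immutable.
        if key.endswith(".html"):
            cache = "no-cache"
        else:
            cache = f"public, max-age={long_cache_seconds}"
        plan.append(
            {
                "Key": key,
                "ContentType": content_type or "application/octet-stream",
                "CacheControl": cache,
            }
        )
    return plan


if __name__ == "__main__":
    for entry in plan_upload(["index.html", "static/app.3f9a.js"]):
        print(entry)
```

Each entry could then be passed to an S3 client, for example as the `ExtraArgs` of boto3's `upload_file`, with the bucket name supplied by the deployment configuration.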

The Simulation Engine

When a user submits a scenario, it is sent off to the simulation engine. You can read about the Data Model that drives the simulation engine in another previous update.

The Simulation Engine, as the name suggests, is the component of the platform responsible for estimating, through simulation, how people travel within and across the County. The engine runs on cloud compute in AWS, specifically EC2 instances that are automatically sized according to the simulation being requested. It communicates with other components of the platform, such as the UI and the Data Exchange, via RESTful APIs. We use a combination of AWS storage solutions, spanning relational and NoSQL databases as well as file storage in S3, to hold simulation configurations and digital representations of the transport networks we simulate.
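The idea of sizing an instance to the simulation can be sketched as a simple lookup. This is purely illustrative: the article does not say which instance families the engine uses or how it estimates demand, so the `c5` sizes below (real EC2 types) and the memory-per-trip rule are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceOption:
    name: str
    vcpus: int
    memory_gib: int


# Real EC2 compute-optimised sizes, listed smallest first. Whether the
# Simulation Engine uses this family is an assumption.
OPTIONS = [
    InstanceOption("c5.xlarge", 4, 8),
    InstanceOption("c5.2xlarge", 8, 16),
    InstanceOption("c5.4xlarge", 16, 32),
    InstanceOption("c5.9xlarge", 36, 72),
]


def choose_instance(simulated_trips, gib_per_million_trips=4.0):
    """Return the smallest option whose memory covers the estimated need.

    The linear memory estimate is a hypothetical rule of thumb.
    """
    needed_gib = simulated_trips / 1_000_000 * gib_per_million_trips
    for option in OPTIONS:
        if option.memory_gib >= needed_gib:
            return option
    raise ValueError(f"no listed instance offers {needed_gib:.0f} GiB")


if __name__ == "__main__":
    print(choose_instance(3_000_000).name)
```

Picking the smallest instance that fits keeps the cost of small "what-if" runs low while still allowing large runs to scale up.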

The Data Exchange

The main role of the Data Exchange is to process the results of the simulations when they are ready, and to serve those results back to the UI when they are called for. The Data Exchange not only bridges data flows between the other components but also performs access control and logging and enforces data schemas.

The Data Exchange runs on Amazon EKS, which greatly reduces the headaches of maintaining and securing a Kubernetes cluster while providing massive scalability off the back of EC2. It also uses RDS PostgreSQL for short-term storage of processed data and metadata.

The Data Store

The Data Store is responsible for the long-term storage of the data generated by the system. The results for every simulation that is run, along with any network changes under the corresponding scenario, are stored here.

The Data Store product has been built around several AWS services. At the heart of the Data Store are S3, used for storing the data, and EMR, used both for efficient data ingest and for optimised retrieval of data through SQL. Supporting services make use of Elastic Beanstalk and EC2, with RDS Aurora and DynamoDB for persisting metadata. The Data Store communicates with the rest of the system by using EventBridge to push updates to an SNS topic to which the Data Exchange is subscribed.
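On the subscriber side, a notification delivered by SNS wraps the original EventBridge event as a JSON string in its `Message` field. The sketch below shows how a subscriber such as the Data Exchange might unwrap it. The envelope and event field names (`Type`, `Message`, `source`, `detail-type`, `detail`) follow the standard SNS and EventBridge formats; the function name and the example event contents are hypothetical.

```python
import json


def extract_event(sns_body: str) -> dict:
    """Unwrap an EventBridge event from an SNS HTTP(S) notification body."""
    envelope = json.loads(sns_body)
    # SNS also sends subscription-confirmation messages; only
    # notifications carry an event payload.
    if envelope.get("Type") != "Notification":
        raise ValueError(f"unexpected SNS message type: {envelope.get('Type')}")
    event = json.loads(envelope["Message"])
    return {
        "source": event["source"],
        "detail_type": event["detail-type"],
        "detail": event["detail"],
    }


if __name__ == "__main__":
    # Hypothetical event: these source and detail values are invented.
    example_event = {
        "version": "0",
        "id": "example-id",
        "detail-type": "SimulationResultsStored",
        "source": "mimas.datastore",
        "detail": {"simulation_id": "sim-123"},
    }
    body = json.dumps({"Type": "Notification", "Message": json.dumps(example_event)})
    print(extract_event(body))
```

A production subscriber would also verify the SNS message signature before trusting the payload; that step is omitted here for brevity.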

Cross-cutting concerns

While the modular approach to the MIMAS architecture allows consortium members to develop and deploy their components independently, there are aspects of the design that, for a cohesive solution, must run through the whole system. It is here that AWS comes to the fore, providing the frameworks to glue the solution together.

Authentication and Authorisation

Controlling who has access to which information is an important aspect of any web application. Amazon Cognito is well placed to manage authentication and authorisation within MIMAS. The user tokens it generates can be passed between components, so that a user’s identity can be verified and operations authorised at the appropriate level.
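As a rough illustration of authorising an operation from a token, the sketch below checks the claims of a Cognito access token after its signature has already been verified against the user pool's public keys (that verification is deliberately left out). The claim names `token_use`, `exp`, and `cognito:groups` are standard Cognito claims; the function name and the group `scenario-editors` are hypothetical.

```python
import time


def is_authorised(claims, required_group, now=None):
    """Decide whether already-verified token claims permit an operation.

    Assumes the caller has verified the token signature and issuer.
    """
    now = time.time() if now is None else now
    # Only access tokens should be used to authorise API calls.
    if claims.get("token_use") != "access":
        return False
    # Reject expired tokens.
    if claims.get("exp", 0) <= now:
        return False
    return required_group in claims.get("cognito:groups", [])


if __name__ == "__main__":
    claims = {
        "token_use": "access",
        "exp": time.time() + 3600,
        "cognito:groups": ["scenario-editors"],  # hypothetical group name
    }
    print(is_authorised(claims, "scenario-editors"))
```

Because each component can run the same check on the same token, no component needs to trust another's word about who the user is.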

System Health, Audit and Monitoring

Monitoring for system health and auditing are further concerns that need to run through the whole system. Amazon CloudWatch provides logging and allows centralised dashboards to be built, giving a holistic view of system health.
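Centralised dashboards are easier to build when every component logs in a consistent shape. The following is a minimal sketch of a shared log-line format, assuming the partners agree on a handful of common fields; the field names and the `log_record` helper are hypothetical, not part of MIMAS.

```python
import json
from datetime import datetime, timezone


def log_record(component, level, message, **fields):
    """Build one JSON log line with fields common to every component."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "component": component,
        "level": level,
        "message": message,
    }
    # Extra context (for example a simulation identifier) is merged in so
    # that related entries from different components can be correlated.
    record.update(fields)
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(log_record("data-exchange", "INFO", "results ingested", simulation_id="sim-123"))
```

CloudWatch Logs Insights can discover fields in JSON log lines, so a shared field such as `simulation_id` would let one query follow a single simulation across components.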

AWS account governance

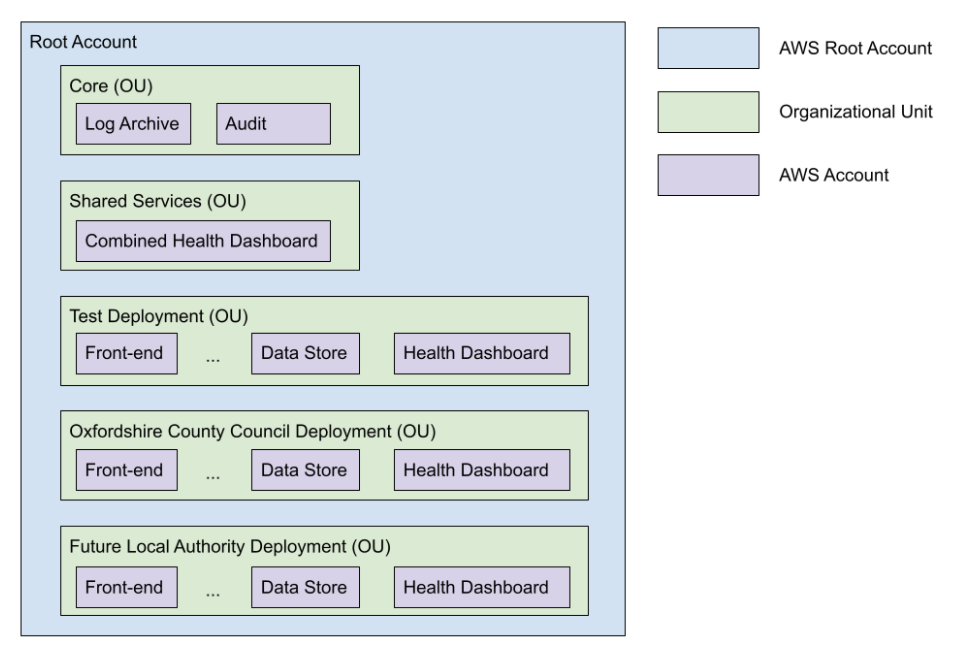

As we have seen, delegating responsibility for deployment as well as development of the individual components to the respective partners comes with many advantages. One potential pitfall, however, is that several agents need administrative access to AWS in order to deploy and administer their components. The solution to this is to create an AWS Organization with multiple sub-accounts and to deploy the individual components into the sub-accounts. Individuals then only need administrative access on the accounts they are responsible for, reducing the risk of treading on the toes of other partners.

AWS Control Tower is an excellent tool that reduces the administrative burden of creating and setting up governance on several accounts. It allows for global policies to be set up and applied to all accounts, and makes it easy to set appropriate access on each of them.
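A global policy of this kind is typically expressed as a service control policy (SCP) attached at the organisation level. The sketch below generates a simplified example that denies activity outside a list of approved regions. The policy grammar and the `aws:RequestedRegion` condition key are standard; the choice of rule, the function name, and the region are assumptions, and the article does not say which policies MIMAS applies.

```python
import json


def region_guardrail(allowed_regions):
    """Build a simplified SCP that denies requests outside approved regions.

    Illustrative only: real policies usually exempt global services
    (such as IAM) using NotAction, which is omitted here.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRegions",
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {
                        "aws:RequestedRegion": sorted(allowed_regions)
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # eu-west-2 (London) is an assumed example region.
    print(json.dumps(region_guardrail(["eu-west-2"]), indent=2))
```

Because the policy applies to every account beneath the point where it is attached, individual partners keep administrative freedom inside their own account while still operating within shared limits.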

Future-proof account structure

AWS Control Tower can also be used in conjunction with Organizational Units to arrange accounts in a logical structure, and administer them in groups. We have adopted a model for organising accounts which will support growth as the solution is adopted by more Local Authorities.
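One way to picture such a structure is as a tree of Organizational Units with accounts at the leaves. The sketch below models that tree and collects every account beneath a given unit, which is the set a policy attached to that unit would govern. All unit and account names are invented for illustration; the article does not describe the actual MIMAS hierarchy.

```python
from dataclasses import dataclass, field


@dataclass
class OrgUnit:
    name: str
    accounts: list = field(default_factory=list)
    children: list = field(default_factory=list)


def accounts_under(unit):
    """Return every account in this unit and in all nested units."""
    found = list(unit.accounts)
    for child in unit.children:
        found.extend(accounts_under(child))
    return found


if __name__ == "__main__":
    # Hypothetical layout: one unit per Local Authority, one account
    # per component.
    oxfordshire = OrgUnit(
        "oxfordshire",
        accounts=["ui", "simulation-engine", "data-exchange", "data-store"],
    )
    another = OrgUnit("another-authority", accounts=["ui", "simulation-engine"])
    root = OrgUnit("local-authorities", children=[oxfordshire, another])
    print(accounts_under(root))
```

Adding a new Local Authority then means adding one new unit under the same parent, which inherits the parent's policies without any change to existing accounts.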
